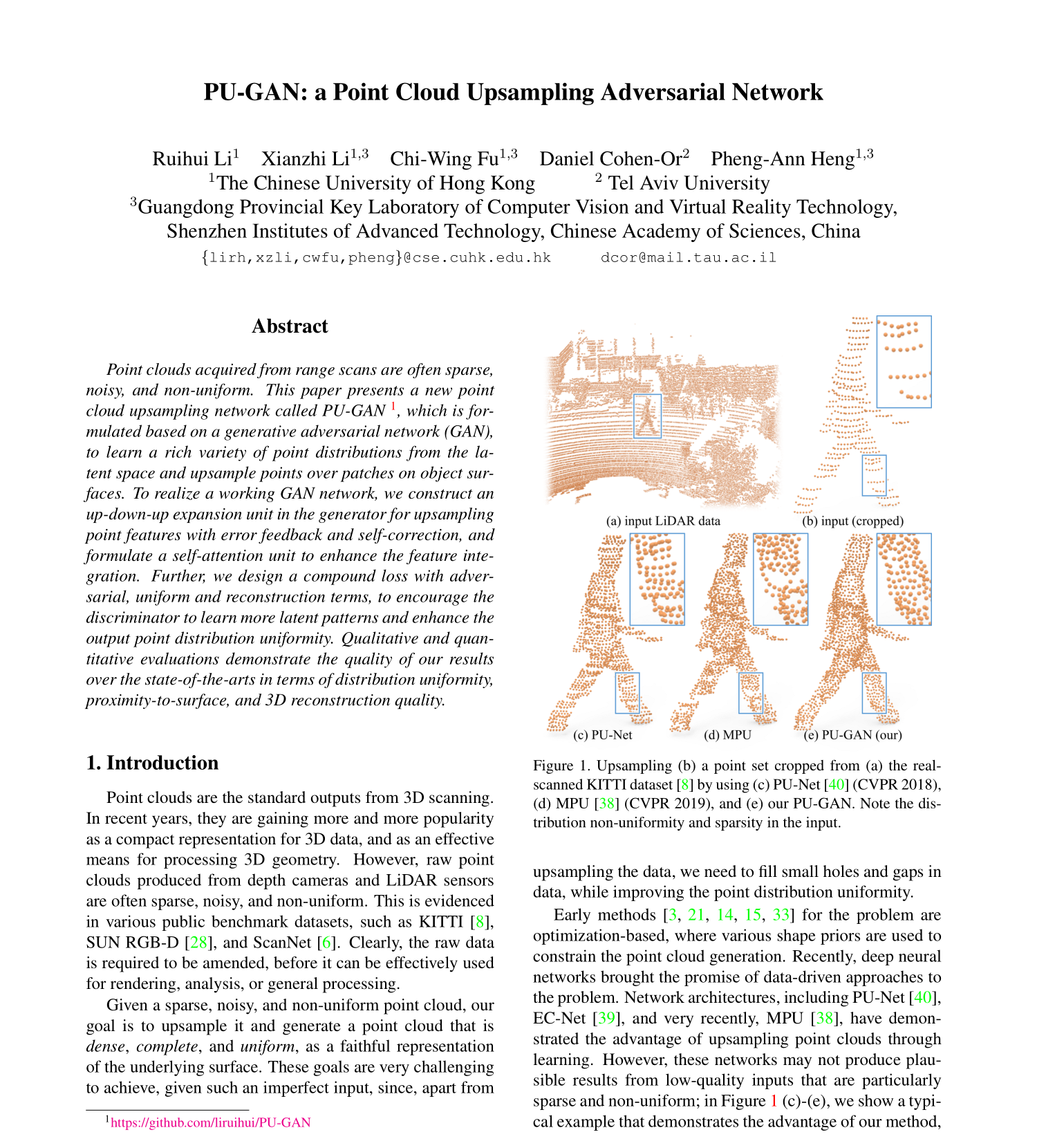

Ruihui Li, Xianzhi Li, Chi-Wing Fu, Daniel Cohen-Or, Pheng-Ann Heng. PU-GAN: a Point Cloud Upsampling Adversarial Network. In ICCV, 2019. [arxiv] [paper] [supp]


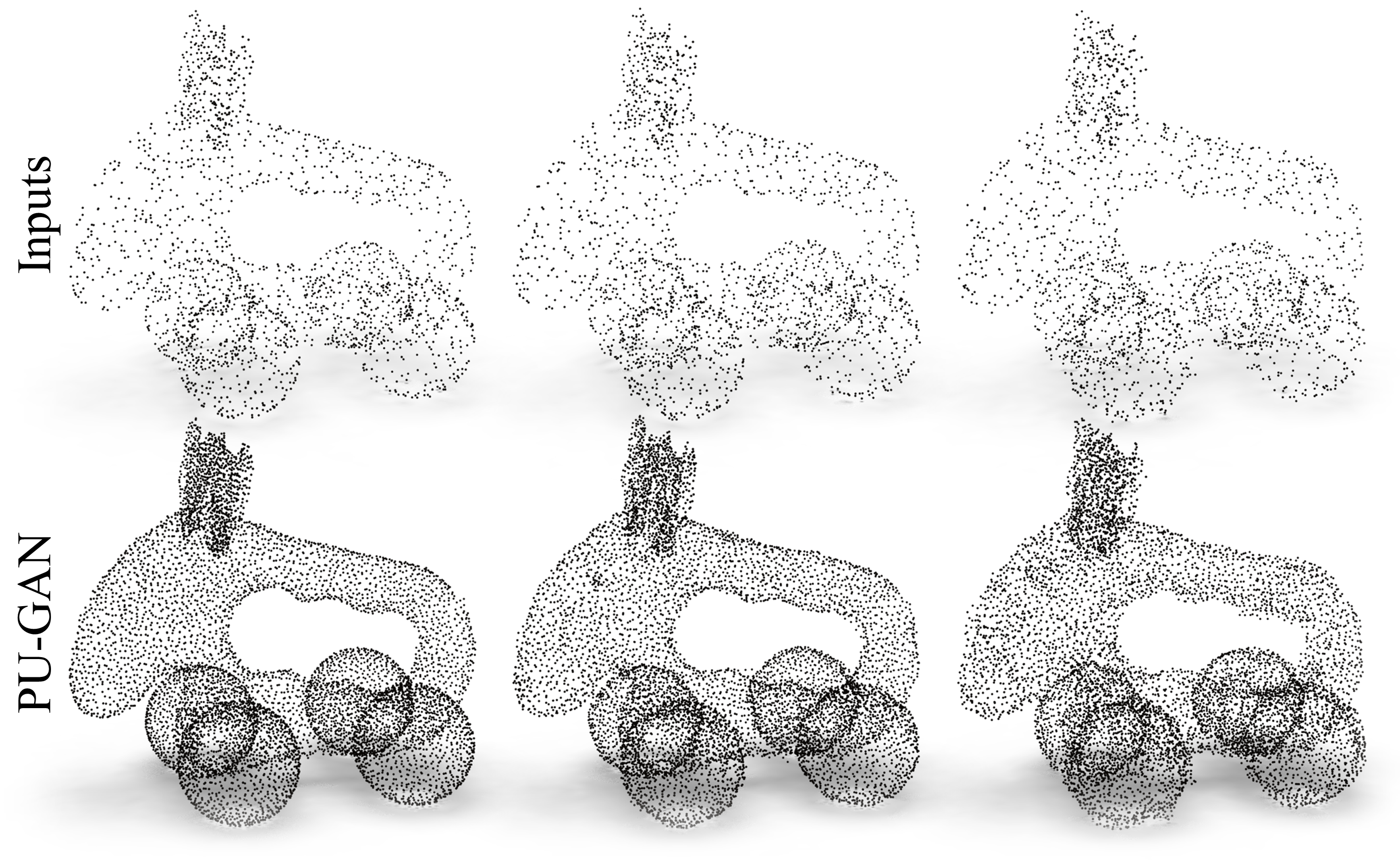

Upsampling point sets of varying noise levels


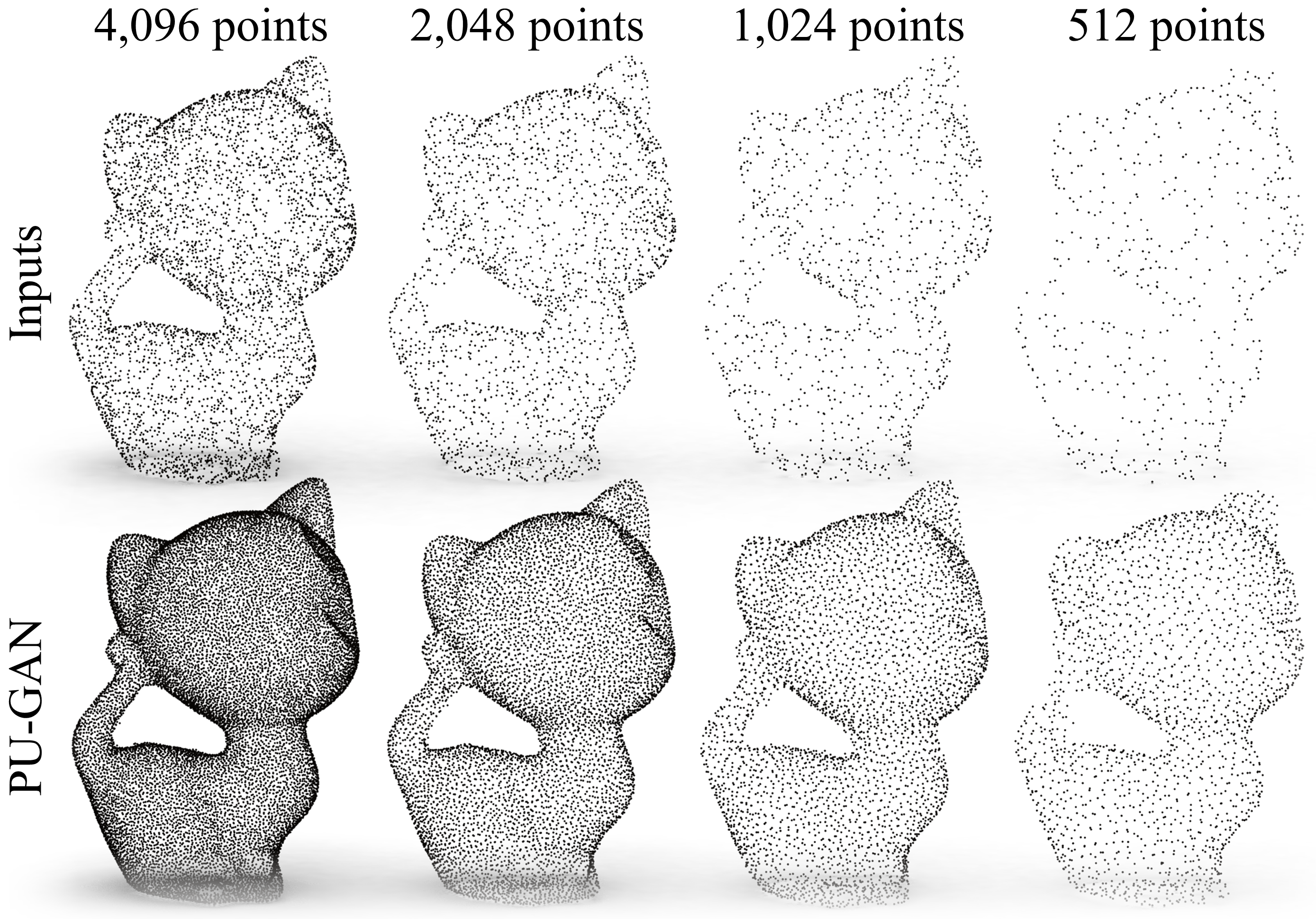

Upsampling point sets of varying sizes

Citation

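```bibtex
@inproceedings{li2019pugan,
  title     = {{PU-GAN}: a Point Cloud Upsampling Adversarial Network},
  author    = {Li, Ruihui and Li, Xianzhi and Fu, Chi-Wing and Cohen-Or, Daniel and Heng, Pheng-Ann},
  booktitle = {IEEE International Conference on Computer Vision (ICCV)},
  year      = {2019}
}
```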

Acknowledgments